
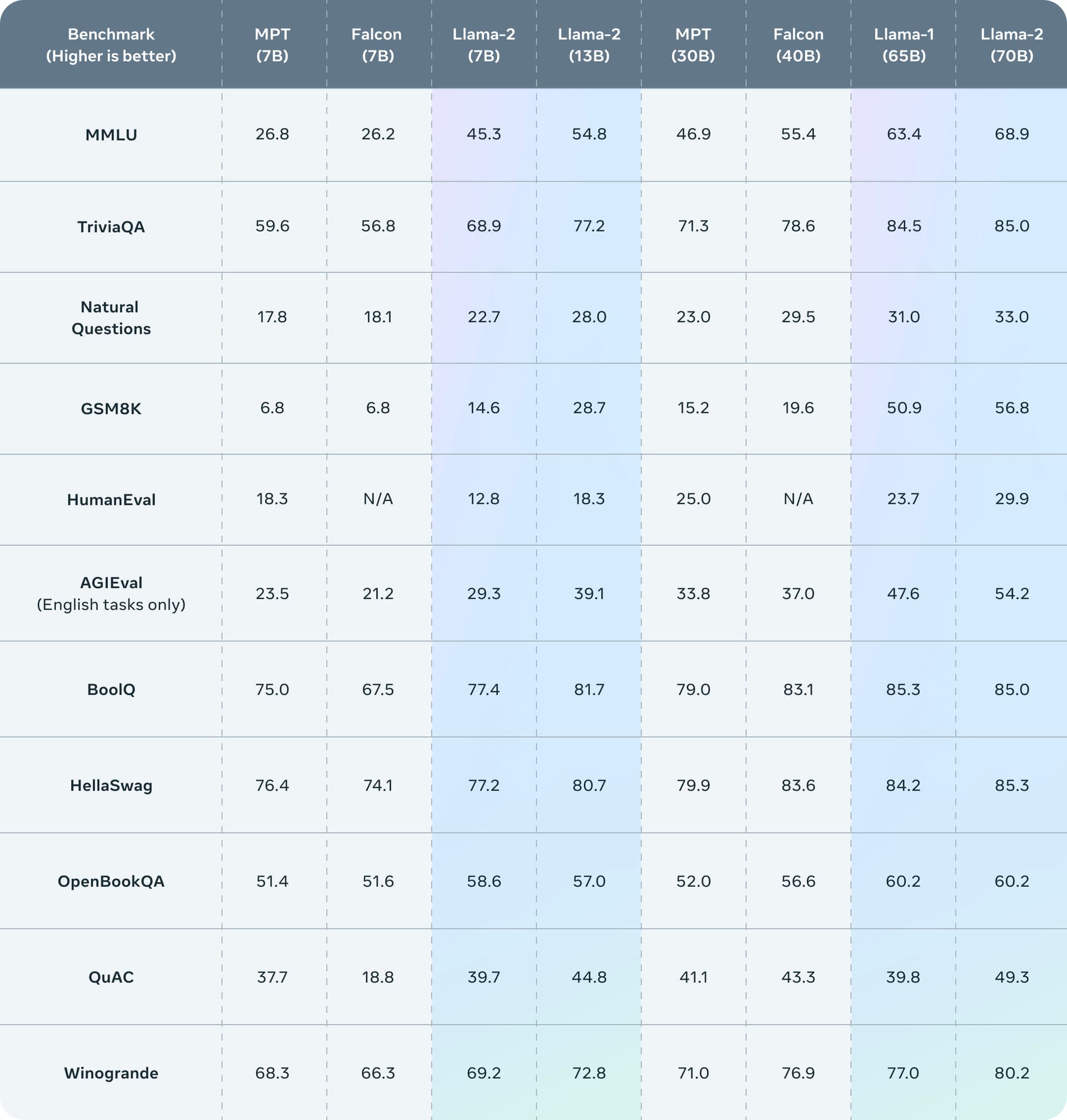

We introduce LLaMA, a collection of foundation language models ranging from 7B to 65B parameters. We train our models on trillions of tokens, and show that it is possible to train state-of-the-art models using publicly available datasets exclusively, without resorting to proprietary and inaccessible datasets. In particular, LLaMA-13B outperforms GPT-3 (175B) on most benchmarks, and LLaMA-65B is competitive with the best models, Chinchilla-70B and PaLM-540B.

Meta releases LLaMA 2, an open-source language model available for commercial use (7B, 13B, 70B / 4k context). Reading & Information Sharing, PyTorch Korea User Group

We have a broad range of supporters around the world who believe in our open approach to today's AI: companies that have given early feedback and are excited to build with Llama 2, cloud providers that will include the model as part of their offering to customers, researchers committed to doing research with the model, and people across tech, academia, and policy who see the benefits of Llama and an open platform as we do.

Meta and Microsoft Introduce Llama 2 Language Model for AI • iPhone in Canada Blog

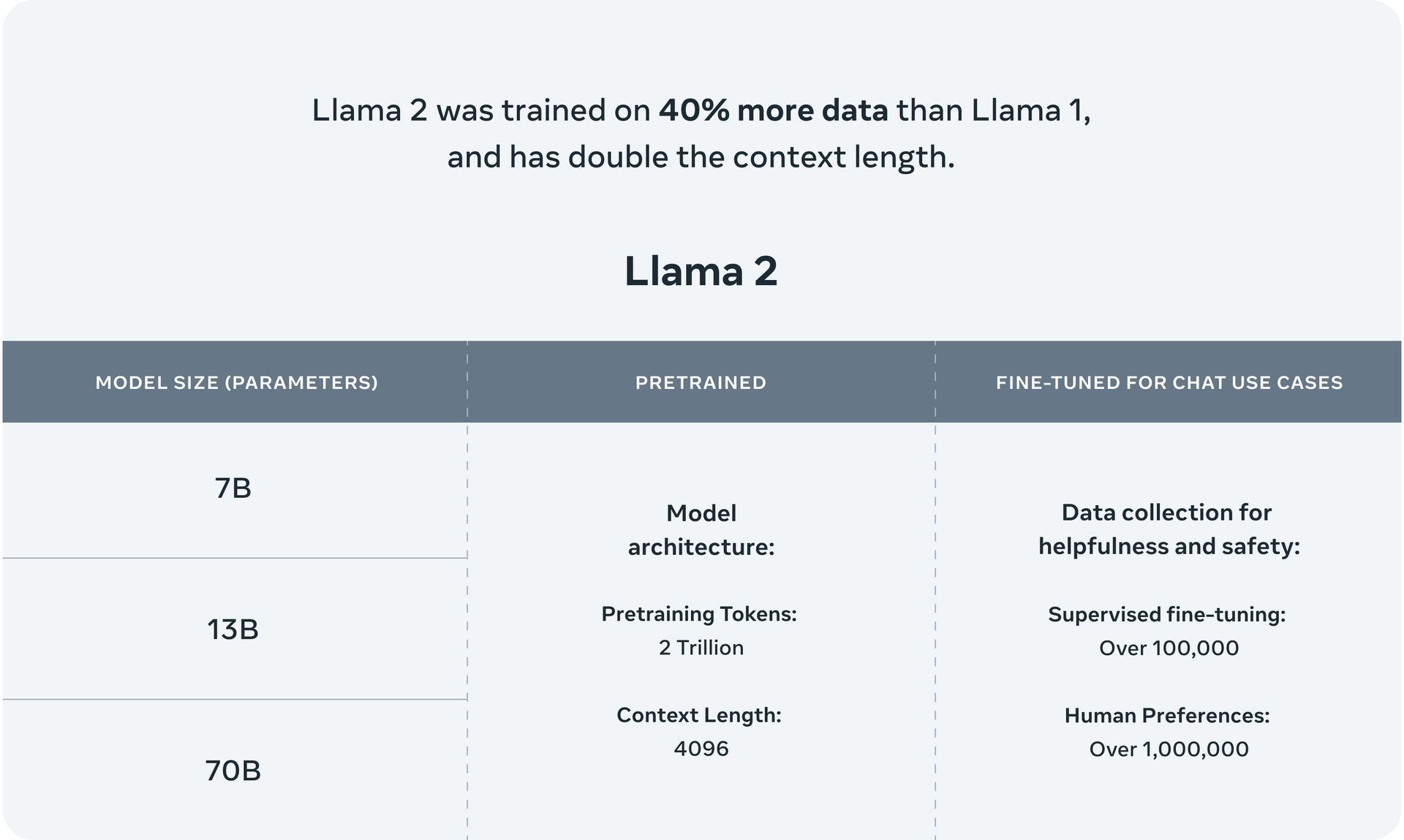

Llama 2 is a family of large language models capable of generating text and code in response to prompts.

- References: *Llama 2: Open Foundation and Fine-Tuned Chat Models* paper; Meta's Llama 2 webpage; Meta's Llama 2 Model Card webpage
- Architecture type: Transformer
- Network architecture: Llama 2
- Model version: N/A


We present TinyLlama, a compact 1.1B language model pretrained on around 1 trillion tokens for approximately 3 epochs. Building on the architecture and tokenizer of Llama 2, TinyLlama leverages various advances contributed by the open-source community (e.g., FlashAttention), achieving better computational efficiency. Despite its relatively small size, TinyLlama demonstrates remarkable performance in a series of downstream tasks.
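"Around 1 trillion tokens for approximately 3 epochs" means the model processes roughly three times the corpus size in total. The sketch below makes that arithmetic explicit and adds a rough compute estimate using the common C ≈ 6·N·D rule of thumb from the scaling-law literature. That heuristic is an assumption swapped in for illustration; it is not a figure reported in the TinyLlama abstract, and the function names are hypothetical.

```python
def tokens_seen(unique_tokens: float, epochs: float) -> float:
    """Total tokens processed when a corpus is repeated for several epochs."""
    return unique_tokens * epochs


def approx_training_flops(n_params: float, n_tokens: float) -> float:
    """Rough training compute via the C ~ 6 * N * D rule of thumb (an assumption).

    The factor 6 counts ~2 FLOPs per parameter per token for the forward pass
    and ~4 for the backward pass; attention and other overheads are ignored.
    """
    return 6.0 * n_params * n_tokens


if __name__ == "__main__":
    d = tokens_seen(1e12, 3)             # ~1T unique tokens x ~3 epochs
    c = approx_training_flops(1.1e9, d)  # 1.1B parameters
    print(f"tokens seen ~ {d:.1e}, compute ~ {c:.1e} FLOPs")
```

With these inputs the estimate comes to about 3 trillion tokens and on the order of 2 × 10^22 FLOPs; treat it as an order-of-magnitude figure only.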


arXiv:2307.09288 · Llama 2: Open Foundation and Fine-Tuned Chat Models · Published on Jul 18, 2023 · Featured in Daily Papers on Jul 19, 2023 · Authors: Hugo Touvron, Louis Martin, Kevin Stone, Peter Albert, Amjad Almahairi, Yasmine Babaei, Nikolay Bashlykov, Soumya Batra, Prajjwal Bhargava, Shruti Bhosale, Dan Bikel, Lukas Blecher, et al.


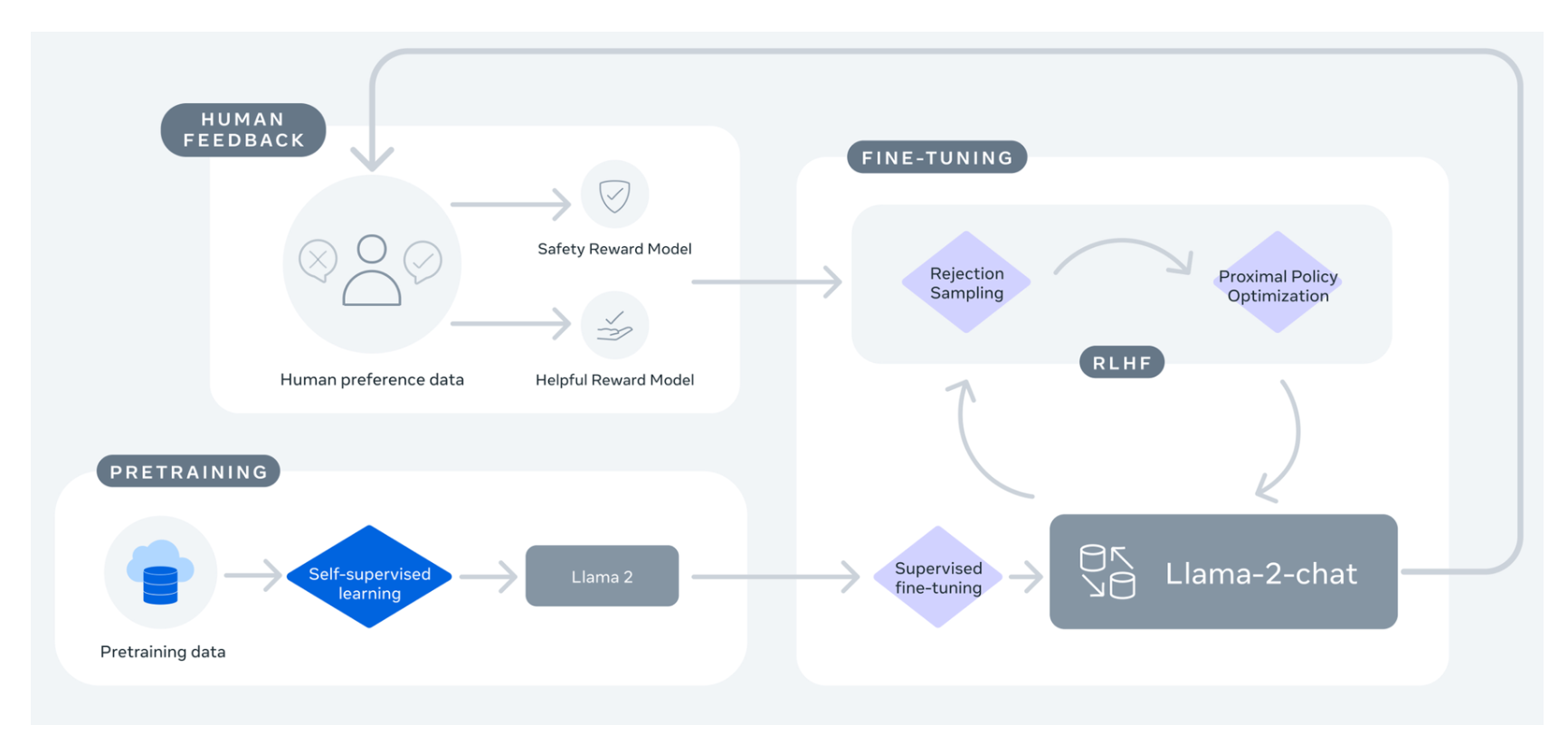

Llama 2-Chat: Fine-Tuning. Llama 2-Chat, optimized for dialogue use cases, is the result of several months of research and iterative application of alignment techniques, including both instruction tuning and Reinforcement Learning with Human Feedback (RLHF), requiring significant computational and annotation resources. (The paper describes this process in great detail.)
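RLHF depends on a reward model trained on human preference pairs. The Llama 2 paper trains it with a binary ranking loss, -log(σ(r(x, y_chosen) − r(x, y_rejected) − m(r))), where the margin m(r) grows with how strongly annotators preferred one response. The sketch below computes that loss for a single pair of scalar rewards; the function name is hypothetical and the rewards are plain floats rather than outputs of an actual model.

```python
import math


def binary_ranking_loss(r_chosen: float, r_rejected: float, margin: float = 0.0) -> float:
    """-log(sigmoid(r_chosen - r_rejected - margin)) for one preference pair.

    Written as softplus(-z) in a numerically stable form so that large
    reward gaps in either direction do not overflow math.exp.
    """
    z = r_chosen - r_rejected - margin
    if z >= 0:
        return math.log1p(math.exp(-z))
    # for z < 0: log(1 + e^{-z}) = -z + log(1 + e^{z})
    return -z + math.log1p(math.exp(z))


if __name__ == "__main__":
    print(binary_ranking_loss(2.0, 0.5))              # chosen scored higher: small loss
    print(binary_ranking_loss(2.0, 0.5, margin=3.0))  # margin demands a wider gap
```

A positive margin raises the loss for pairs whose reward gap is smaller than the margin, which pushes the reward model to separate clearly preferred responses more widely.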

Meta Llama 2 Paper

This work develops and releases Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters, which may be a suitable substitute for closed-source models.

This Is Why You Can’t Use Llama2 by John Adeojo AI Mind

Llama 2 is a family of state-of-the-art open-access large language models released by Meta today, and we're excited to fully support the launch with comprehensive integration in Hugging Face. The chat models expect a specific prompt template, which follows the model's training procedure as described in the Llama 2 paper. We can use any system_prompt we want, but it's crucial that the format matches the one used during training.
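The template itself is not reproduced on this page. The sketch below assembles a prompt in the layout shown in Hugging Face's Llama 2 launch material: the system prompt is wrapped in `<<SYS>>` markers inside the first `[INST]` block, and each completed assistant answer is closed with `</s>` before the next turn opens with `<s>[INST]`. The helper name is hypothetical, and in practice the tokenizer may add the beginning-of-sequence token itself, so treat the literal `<s>` strings as an assumption about how the prompt is fed in.

```python
from typing import List, Optional, Tuple

B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_prompt(system_prompt: str,
                        history: List[Tuple[str, str]],
                        user_message: str) -> str:
    """Hypothetical helper: format a conversation in the Llama 2 chat layout.

    history holds completed (user, assistant) turns; user_message is the new
    turn the model should answer. The system prompt is folded into the first
    user turn only.
    """
    turns: List[Tuple[str, Optional[str]]] = list(history) + [(user_message, None)]
    parts = []
    for i, (user, answer) in enumerate(turns):
        if i == 0:
            user = f"{B_SYS}{system_prompt}{E_SYS}{user}"
        segment = f"<s>{B_INST} {user} {E_INST}"
        if answer is not None:
            segment += f" {answer} </s>"  # close each finished exchange
        parts.append(segment)
    return "".join(parts)


if __name__ == "__main__":
    print(build_llama2_prompt("You are a helpful assistant.",
                              [("Hi!", "Hello! How can I help?")],
                              "What is grouped-query attention?"))
```

Keeping the system prompt inside the first instruction block, rather than as a separate turn, mirrors the training format; deviating from it is what the warning about matching the training format is about.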


Melanie Kambadur, Aurelien Rodriguez, Guillaume Wenzek, Francisco Guzmán. In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters.

What's new in Llama 2 & how to run it locally AGI Sphere

Llama 1 vs. Llama 2: Meta's Genius Breakthrough in AI Architecture | Research Paper Breakdown (published 08/23/23, updated 10/11/23). First things first: we broke down the Llama 2 paper in a video, turning seventy-eight pages of reading into fewer than fifteen minutes of watching.


Papers Explained 60: Llama 2 (Ritvik Rastogi, published in DAIR.AI, Oct 9, 2023). Llama 2 is a collection of pretrained and fine-tuned large language models (LLMs).


In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. Additionally, this paper contributes a thorough description of our fine-tuning methodology and approach to improving LLM safety. We hope that this openness will enable the community to reproduce fine-tuned LLMs and continue to improve the safety of those models.

Llama 2 Paper

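The paper describes the training process for Llama 2-Chat, the chat variant of Llama 2. Llama 2 is pretrained using publicly available online sources. An initial version of Llama 2-Chat is then created through supervised fine-tuning. Subsequently, the model is iteratively refined using RLHF, specifically rejection sampling and Proximal Policy Optimization (PPO).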
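Of the two RLHF methods, rejection sampling is the simpler to picture: for each prompt, sample several responses from the current model, score them with the reward model, and keep the best one as a new fine-tuning target. The sketch below shows one such best-of-k round. The `generate` and `reward` callables are toy stand-ins (assumptions) for the chat model and the reward model, and the function name is hypothetical; PPO is not shown.

```python
from typing import Callable, List, Tuple


def rejection_sampling_round(
    prompts: List[str],
    generate: Callable[[str], str],
    reward: Callable[[str, str], float],
    k: int = 4,
) -> List[Tuple[str, str]]:
    """Best-of-k selection: sample k candidate responses per prompt, score
    each with the reward function, and keep the top-scoring one."""
    selected = []
    for prompt in prompts:
        candidates = [generate(prompt) for _ in range(k)]
        best = max(candidates, key=lambda c: reward(prompt, c))
        selected.append((prompt, best))
    return selected  # (prompt, response) pairs to fine-tune on next


if __name__ == "__main__":
    import random

    rng = random.Random(0)
    # Hypothetical toy stand-ins: a "policy" emitting random-length answers
    # and a "reward model" that simply prefers longer answers.
    toy_generate = lambda p: p + " " + "!" * rng.randint(1, 5)
    toy_reward = lambda p, c: float(len(c))
    for prompt, best in rejection_sampling_round(["hi", "bye"], toy_generate, toy_reward):
        print(prompt, "->", best)
```

Repeating this round with an updated model and reward model gives the iterative refinement the paper describes; larger k raises the expected best reward at the cost of more sampling.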


Llama 2: open source, free for research and commercial use. We're unlocking the power of these large language models. Our latest version of Llama, Llama 2, is now accessible to individuals, creators, researchers, and businesses so they can experiment, innovate, and scale their ideas responsibly.


As reported in the appendix of the LLaMA 2 paper, the primary architectural differences from the original LLaMA are an increased context length and grouped-query attention (GQA), which the paper uses for the larger 34B and 70B models. The context window was doubled in size, from 2048 to 4096 tokens, so the model can attend over twice as much text in a single prompt and response.
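In grouped-query attention, several query heads share one key/value head, which shrinks the key/value cache that must be kept in memory during generation. The sketch below implements causal GQA for toy matrices in pure Python and computes cache sizes. The 70B-style shape used in the demo (80 layers, 64 query heads, 8 key/value heads, head dimension 128, 2-byte fp16 values) is an assumption based on the publicly released model configuration, not a figure quoted on this page; all function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # one row per sequence position, one column per head dim


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention for one head; position i sees positions 0..i."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for i, qi in enumerate(q):
        scores = [scale * sum(a * b for a, b in zip(qi, k[j])) for j in range(i + 1)]
        w = softmax(scores)
        out.append([sum(w[j] * v[j][c] for j in range(i + 1)) for c in range(len(v[0]))])
    return out


def grouped_query_attention(qs: List[Matrix], ks: List[Matrix], vs: List[Matrix]) -> List[Matrix]:
    """qs: one matrix per query head; ks/vs: one per key/value head (fewer of them).
    Each run of n_heads // n_kv_heads consecutive query heads shares one K/V head."""
    n_heads, n_kv_heads = len(qs), len(ks)
    if n_heads % n_kv_heads != 0:
        raise ValueError("n_heads must be a multiple of n_kv_heads")
    group = n_heads // n_kv_heads
    return [causal_attention(qs[h], ks[h // group], vs[h // group]) for h in range(n_heads)]


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    # factor 2: one K and one V tensor are cached per layer
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


if __name__ == "__main__":
    # Assumed 70B-style shape at the full 4096-token context.
    mha = kv_cache_bytes(80, 64, 128, 4096)  # if every query head had its own K/V
    gqa = kv_cache_bytes(80, 8, 128, 4096)   # 8 shared K/V heads
    print(mha / 2**30, gqa / 2**30)  # → 10.0 1.25
```

Setting the number of key/value heads equal to the number of query heads recovers standard multi-head attention, and setting it to 1 gives multi-query attention; GQA sits between the two, here cutting the per-sequence cache from 10 GiB to 1.25 GiB under the assumed shape.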


We release Code Llama, a family of large language models for code based on Llama 2 providing state-of-the-art performance among open models, infilling capabilities, support for large input contexts, and zero-shot instruction following ability for programming tasks.
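Code Llama's infilling capability comes from a fill-in-the-middle training objective: a document is split into a prefix, a middle, and a suffix, and the model learns to generate the middle given the other two. The sketch below builds such an example in prefix-suffix-middle order. The sentinel strings `<PRE>`, `<SUF>`, `<MID>` are placeholders assumed for illustration; a real model defines its own special tokens and exact spacing, and the function names are hypothetical.

```python
import random
from typing import Tuple

# Placeholder sentinels (assumptions for illustration only).
PRE, SUF, MID = "<PRE>", "<SUF>", "<MID>"


def to_psm(doc: str, start: int, end: int) -> Tuple[str, str]:
    """Split doc into prefix/middle/suffix and return (prompt, target) in
    prefix-suffix-middle order: the model sees both sides, then emits the middle."""
    if not 0 <= start <= end <= len(doc):
        raise ValueError("need 0 <= start <= end <= len(doc)")
    prefix, middle, suffix = doc[:start], doc[start:end], doc[end:]
    return f"{PRE}{prefix}{SUF}{suffix}{MID}", middle


def random_psm(doc: str, rng: random.Random) -> Tuple[str, str]:
    """Pick two random character-level cut points, as a training-data transform might."""
    start, end = sorted(rng.randint(0, len(doc)) for _ in range(2))
    return to_psm(doc, start, end)


if __name__ == "__main__":
    code = "def square(x):\n    return x * x\n"
    prompt, target = random_psm(code, random.Random(0))
    print(repr(prompt))
    print(repr(target))
```

At inference time the same layout lets an editor supply the code before and after the cursor and ask the model for only the missing span.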